Kevin Murphy (00:00)

Welcome to What Matters Most, Sands Capital’s podcast series in which we explore some of the trends and businesses that are propelling the pace of global innovation and changing the way we live and work today and into the future. Today, I’m pleased to welcome back Daniel Pilling to the podcast.

Kevin Murphy (00:16)

Daniel is an expert on all things semiconductor, and in his first appearance, in Episode 1 of this season, Daniel took us through the world of semiconductors via the lens of a company called Entegris. That Entegris episode became wildly popular, and Daniel was then featured on the Business Breakdown podcast. So if you haven’t given those podcasts a listen yet, please do. They provide a wonderful base of knowledge, and Daniel does an incredible job of breaking down something as complex as semiconductors into user-friendly analogies and simple concepts. So needless to say, we’re thrilled to have Daniel back today. So, Daniel, thanks for joining us.

Daniel Pilling (00:50)

Thank you, Kevin. It’s great to be here.

Kevin Murphy (00:53)

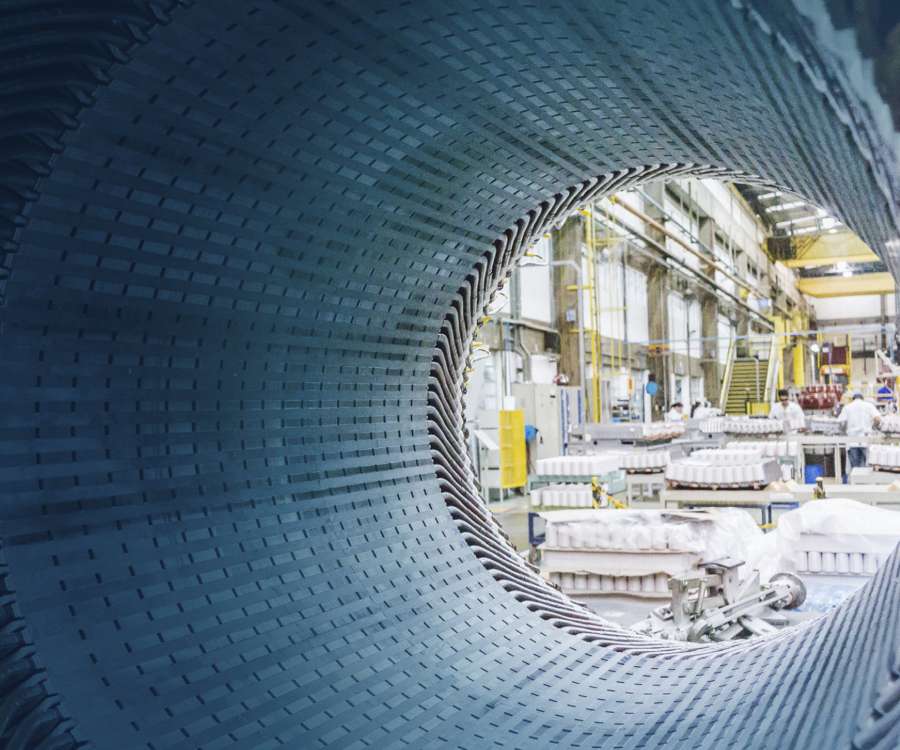

Great. Well, so our topic today is a company called Cadence, which is a software provider to the global semiconductor industry that provides two invaluable services. The first is analog and digital chip design tools. So think of that as what Excel is to a financial analyst or CAD—or computer-aided design—is to an architect or an engineer.

The second offering is electronic design automation, or EDA. So EDA tools, as I understand them, are extremely complex algorithms that take the functional design of a chip and translate that design into the real world governed by physics and manufacturing rules that need to be adhered to when placing transistors on a chip and then verifying the viability of that design before millions of dollars are spent to manufacture them.

So this is going to be an analogy-rich podcast, I think, because that’s the best way for me to understand this stuff. So I’ll start with one, and you can tell me I’m way off base, or you can agree or disagree. But here’s how I think of the design and the EDA functionality: The design is a beautiful loaf of bread. EDA tools are then the recipe for how to combine the flour, water, yeast, leavening time, oven temperature, etc. that will result in that best loaf of bread before you mess up your kitchen. So it’s really kind of a design and then proof of concept through EDA. Am I even on the right track there?

Daniel Pilling (02:17)

I think so, Kevin. I think what we could add is this: You’re kind of designing the most complicated bread ever.

We could use another analogy, I suppose. Imagine you had 100 billion Lego pieces that make up your bread. That’s your recipe. You need to optimize them and put them together in a certain way. If you don’t do that, the bread will not work, and it’s going to be poisonous, every single one. And you’re going to lose a lot of money designing this bread.

Kevin Murphy (02:48)

Got it, OK, good, that’s helpful. We’ll dive into the details here, too, but before we do, for those who didn’t listen to the Entegris podcast or who may be new to the semiconductor industry, can you just give us a brief overview of the semiconductor manufacturing process? Entegris kind of sits at the beginning and throughout that process, but explain exactly how a silicon wafer goes to the chips that we have in our devices today.

Daniel Pilling (03:15)

Yes, I mean, so I like to think about it sort of as a three-legged stool. And if you can imagine a three-legged stool has to have all its legs because otherwise it will not stand. And there are three parts to it. There’s Cadence, which is, as you noted, an EDA company—an electronic design automation company that helps to design the chips.

Then there’s something called semi-capex [capital expenditure] equipment companies. So these are companies that design the equipment that’s actually used to manufacture these chips in the real world.

And then there are the foundries, i.e., the Taiwan Semis [Taiwan Semiconductor] of the world, maybe Intel in the future, Samsung. And then all of those have to really work together to make it happen.

And so basically, you have to imagine if you’re a semi-capex equipment company such as ASML, you manufacture lithography equipment. You will have to talk to Taiwan Semi to better understand what is required, what do you need in the next five, 10 years, and here’s our roadmap, and we compare and contrast.

And then Taiwan Semi—and actually ASML too—they have to merge that into the Cadence software because if that’s not around, then nobody can design any chips. So all of those three companies effectively—and anybody else involved—they have a five- to 10-year roadmap that has to be interlocked together. Otherwise, it would not work. And so Cadence and its one big competitor—there is only really one, so it’s really two companies involved. They are absolutely pivotal for this industry, being one of those three legs.

Kevin Murphy (04:50)

OK, so if I’m thinking kind of again, you know, in terms of analogies, would it be fair to say that, you know, you have these kind of great ideas that these computer engineers or design engineers will come up with? Think of it as like the architect who doesn’t know anything about the physical world and wants to build a cantilevered roof that extends several hundred meters off the edge of a building. Is Cadence maybe that reality check for that design that says, well, you may want to do this, but the physical reality of it is that you can’t. Or, in order to do that, you have to, I guess, enlist the help of engineers, cement companies, rebar, that kind of thing, in this order to make that actually happen.

Daniel Pilling (05:34)

Yes, that’s a great way of thinking about it. So maybe to provide a bit more detail. Yes, I mean, so if you want to design, let’s say, an AI [artificial intelligence] chip or a smartphone chip, so-called leading-edge chips, you’re going to have to place tens of billions, sometimes even a hundred billion-plus transistors on a single chip. That’s a big number.

And within that, there are obviously physics rules, i.e., there are various ways that these transistors can react with each other, which may mean it will not work. There might be heat dissipation issues, there’s going to be electromagnetism, etc., etc., etc. A lot of very difficult physics problems.

And then so you may have a great idea of what you want to do, but you need the software to do two things. One, design something that actually works and verify it. And then, two, place those transistors into a file that then can actually be manufactured. And so you may have great ideas and all, but I think the software will help you actually to enable those ideas. And some of those ideas may just not work out.

Kevin Murphy (06:34)

So again, just going back to basics here, maybe take us through the evolution of the semiconductor design process. Starting back with simple, I guess they were cathode ray tubes, on-off switches, and then became etched circuitry, I think, on a piece of wood back then.

Walk us through that. I mean, when did we start tilting into the world of physical limitations to what you can do on a transistor? And maybe kind of put it in the context of Moore’s Law.

Daniel Pilling (07:06)

So I think there are a few important things to know about this. One, I mean, we’ve been on the path from Moore’s Law for, I think, more than 50 years now. And there are two aspects to it, right? There’s one, this idea of the doubling of transistors in a certain amount of time. The other part of it that doesn’t get talked about so much anymore is actually also that the cost per transistor goes down at the same time, too.
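As a rough numerical sketch of the two aspects described here, the snippet below doubles a transistor count on a fixed interval while the cost per transistor falls. The two-year doubling period, the halving of cost per period, and the starting values are illustrative assumptions, not figures from the conversation.

```python
# Illustrative sketch only: the doubling period, the cost decline, and the
# starting values are assumptions, not figures quoted in the podcast.

def moores_law_projection(start_transistors, start_cost_per_transistor,
                          years, doubling_period_years=2.0,
                          cost_factor_per_period=0.5):
    """Return (transistor_count, cost_per_transistor) after `years`."""
    periods = years / doubling_period_years
    transistors = start_transistors * 2 ** periods
    cost = start_cost_per_transistor * cost_factor_per_period ** periods
    return transistors, cost


if __name__ == "__main__":
    for years in (0, 10, 20, 50):
        count, cost = moores_law_projection(1_000, 1.0, years)
        print(f"after {years:2d} years: {count:,.0f} transistors, "
              f"relative cost per transistor {cost:.2e}")
```

The point of the sketch is only that both curves compound: the count grows and the unit cost shrinks by a constant factor each period.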

Kevin Murphy (07:29)

Can I, let me jump in there quickly, because doubling transistors, I think, am I right, the challenge is not just doubling the number of transistors on a wafer, but the fact that the wafer size has stayed the same. So you’re trying to get more transistors on the same real estate, as opposed to, you know, you could double the number of transistors by doubling the size of the wafer, but that isn’t really what’s going on, is it?

Daniel Pilling (07:50)

Absolutely, I mean, so compute is size constrained. The best example might be the smartphone. So we cannot increase the size of a chip within the smartphone significantly. So all you can do actually is to reduce the size of the transistor, put more transistors within the smartphone.

Which then basically means you have more, for lack of a better term, brain cells effectively, which then means the smartphone can do more things in the same amount of time. I.e., more apps, more stuff that we humans like to do, HD [high-definition] pictures, watch Netflix, etc., etc.

So you cannot really do the opposite, though. You cannot increase the size of the chip. I mean, theoretically, you could, but then, A, our phones would start increasing in size, and nobody would buy them. And then, B, and I think that’s the even more important reason here, if you were to double, let’s say, the size of the chip, it would become twice as electricity intensive, which then means that your phone would probably have only half the battery life it has today. Whereas if you shrink the chips, the electricity required per chip goes down.

So actually, you’re kind of OK in terms of your total budget constraints in terms of electricity demand. You’re increasing what the phone can do without increasing the battery requirements at the same time, which obviously explains perfectly what Apple has done over the past 15 years with the iPhone. It became much better, while at the same time, batteries got better, which helped us, and the chip itself got much better in terms of electricity requirements per transistor.
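The power-budget argument can be sketched with simple arithmetic. The model below assumes the chip is the only power draw and that power scales linearly with transistor count; the battery capacity, transistor count, and per-transistor power are made-up values chosen only to keep the numbers readable.

```python
# Illustrative sketch only: every number here is an assumption chosen to make
# the arithmetic easy to follow, not a measurement of any real phone or chip.

def battery_life_hours(battery_wh, transistor_count, watts_per_transistor):
    """Hours of battery life if the chip were the only power draw."""
    chip_power_w = transistor_count * watts_per_transistor
    return battery_wh / chip_power_w


if __name__ == "__main__":
    battery_wh = 10.0     # assumed battery capacity in watt-hours
    transistors = 10e9    # assumed baseline transistor count
    watts_each = 1e-10    # assumed power per transistor in watts

    baseline = battery_life_hours(battery_wh, transistors, watts_each)
    # Twice the chip area with unchanged transistors: twice the power draw.
    bigger_chip = battery_life_hours(battery_wh, 2 * transistors, watts_each)
    # Same area, but transistors shrunk so that each draws half the power.
    shrunk = battery_life_hours(battery_wh, 2 * transistors, watts_each / 2)

    print(f"baseline chip:        {baseline:.1f} h")
    print(f"doubled chip size:    {bigger_chip:.1f} h")
    print(f"shrunken transistors: {shrunk:.1f} h")
```

Under these assumptions, doubling the chip halves battery life, while doubling the transistor count through shrinking leaves it unchanged.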

Kevin Murphy (09:30)

OK, so take us through then, just stepping back. I think that the space between the transistors back in the day was measured in centimeters, and then it moved into millimeters. Now we’re talking about nanometers. So give us some idea of how to visualize that, how to think about how we’ve moved into these small tolerances.

Daniel Pilling (09:52)

So a few things. One, in the past, I think that whatever brand names they had for the nodes were actually real measurements within the transistor. And then, you can measure different parts. Like Intel would measure something, Taiwan Semi something else, et cetera. They all had little differences, but now these have actually become more like marketing names. So when we talk about two nanometer, it probably doesn’t mean that much actually on a transistor level.

But we can give a decent analogy here. So if you take a human hair and you were to compare and contrast it to a leading-edge transistor, it is a tiny, tiny fraction of that hair. I mean, you would be able to fit thousands of transistors into the size of a human hair today. And then going forward, there’s a roadmap of at least five to 10 years to continue shrinking, as we have today on the path of Moore’s Law.

So that’s going to become way, way smaller again. Hopefully, that gives some sort of sense of how small these things are.

Kevin Murphy (10:49)

Yes. And so as they get smaller, they start bumping up against the laws of physics. And I’m trying to get us to go back to Cadence. How does Cadence factor into all this?

Daniel Pilling (11:01)

Yes, I mean, so a few things. So when we think about the laws of physics, there’s … I suppose interesting things happen when you shrink the transistors. What is a transistor at a high level? It’s like a switch. It’s a one or a zero. That’s binary, yes, so. And what you want to do is when you develop these chips, you want to make sure that whenever you want it to be a one, it’s going to be a one. When you want it to be a zero, it’s going to stay a zero.

Now, the problem is: When you shrink, you can have a few things happening. The one tends to be an electrical charge. So the electrical charge can jump from one transistor to another, making the other transistor a one when it isn’t actually supposed to be. This is called quantum tunneling.

There’s also something called parasitic capacitance, where, again, you know, the charge A may impact charge B, and you don’t want that. So actually, what happens here is that the complexity goes up tremendously to build the transistors, in terms of: You have to go from, for example, planar to 3D architectures that insulate the one much better than before. Now, so you have two things happening. The transistor itself is getting much more complicated, A, and then B, you have way more transistors on the chip.

And then, if you think about what EDA does or what Cadence does, then, is to say, well, OK, here’s a chip designer. I’m going to help you place these billions of transistors onto a chip because, let’s face it, a human cannot do that anyway. So I’m going to give you an engine of physics rules, of electromagnetism rules, heat dissipation rules. I’m going to give you a list of functional rules in terms of this is what the chip is supposed to do.

So these are some sort of design hints I’m going to give you. I’m going to package all this up into a software. And then whenever you’re ready, designer, I’m going to start helping you in doing that. So I’m going to implement it for you in a way that is going to make it work. So that’s what EDA helps you to do.

And I mean, I cannot prove this, obviously, but based on what we hear, we’re told at least this is either the most complicated or at least one of the most complicated software pieces that humanity has written.

Kevin Murphy (13:04)

OK, excellent. And the end goal is to reduce or eliminate error rates within the manufacturing process before you even start to invest a dollar in that process. Right?

Daniel Pilling (13:14)

Yes, absolutely. I mean, so designing chips has become a game of hundreds of millions of dollars of investments. I mean, designing an AI chip is incredibly expensive, both in terms of people and software. And then also, if you want to go to Taiwan Semi today and you tell them, Taiwan Semi, I want capacity, A, they will not even look at you if you don’t have a track record, frankly. And B, you cannot give them a chip that doesn’t work, because they’re going to lose capacity that they could have given to somebody else. So it’s called a tape out. When the chip is done and designed, and the blueprint, so to speak, is sent to Taiwan Semi, that has to work. Otherwise, it’s going to be a significant problem both in terms of cost and future capacity requirements within Taiwan Semi.

Kevin Murphy (14:00)

Well, so you mentioned AI chips. Maybe we’ll talk a little bit about that, too, in terms of just the exponential increase in complexity around these chips, right? It’s not static. We haven’t hit a plateau where chip design is what it is and won’t improve or become more complex. So how does Cadence adapt to that? They sell the software of prewritten rules in physics and engineering. But those rules are changing as we learn more about how to do this. How does Cadence adjust to that?

Daniel Pilling (14:37)

Yes, I mean, so a few points. Let’s initially go back to the three-legged stool idea—this idea that they all have to work together. So when Taiwan Semi comes out with a new node, they will have talked to Cadence for years about this already. And then they will give them certain sets of rules that are required to actually design on that node, and that gets directly put into the Cadence software suite. So that’s one way how they adjust to the new reality, because the new reality is a new node, which is going to have new types of complexities.

The second thing then happens on the actual designer front, so to speak. There are a few, but maybe one I would highlight in particular. So as your chip, let’s say, goes from 10 billion transistors to 100 billion, let’s say that took five, seven years, something like that. What happens is that the verification of that, in terms of compute needs and difficulty, is not linear, but it’s exponential. And coming back to the Lego example, if I have four Lego pieces, I think the number of permutations you can have is two to the power of four, so 16 or so. If you have 10 billion, and you have to do two to the power of 10 billion, it’s sort of infinite.
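To put numbers on the Lego example, the sketch below treats each piece as a two-state element, as the two-to-the-power-of-four figure implies, and counts the combinations. This is only the size of the state space; it is not a model of how verification tools actually work, and the function names are hypothetical.

```python
import math

# Illustrative sketch: counts on/off combinations for n two-state elements.
# It says nothing about how real verification software explores them.

def state_count(n_elements):
    """Number of combinations for n two-state elements: 2**n."""
    return 2 ** n_elements


def decimal_digits_of_state_count(n_elements):
    """Digits in 2**n, computed via logarithms so 2**n is never built."""
    return math.floor(n_elements * math.log10(2)) + 1


if __name__ == "__main__":
    print("4 pieces:", state_count(4), "combinations")
    # For 10 billion elements the count itself is too large to print,
    # so report how many decimal digits it would have (roughly 3 billion).
    digits = decimal_digits_of_state_count(10_000_000_000)
    print("10 billion pieces: a number with", f"{digits:,}", "digits")
```

Writing the count for 10 billion elements would take roughly three billion digits, which is why it is "sort of infinite" for practical purposes.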

So this is a really exponentially difficult problem to do, which then means if you’re Cadence, that means a few things. One, your software has to improve; it has to get faster and better. But two, you also start selling more licenses. Because if you’re NVIDIA or whoever is designing these chips, you cannot just hire more people to do the simulation part to verify these things. You’re still going to have a certain number of people—let’s say it’s 10—and instead of buying 10 licenses for those people, they’re going to buy 100 because they’re going to run 10 things in parallel, testing all the stuff to make sure it actually works. So I think it’s a multipronged approach, right? You get designed in by Taiwan Semi, your software has to get better, and you actually have to sell more licenses, which is a nice problem to have for Cadence.
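The license arithmetic in this example can be written out directly. The sketch assumes one license per concurrently running job, which is a simplification for illustration; actual licensing terms are not described in the conversation.

```python
# Illustrative sketch: assumes one license is consumed per concurrent job.
# Real licensing terms are not described in the source.

def licenses_needed(engineers, parallel_runs_per_engineer):
    """Concurrent licenses required for a team running jobs in parallel."""
    return engineers * parallel_runs_per_engineer


if __name__ == "__main__":
    engineers = 10
    for runs in (1, 10):
        total = licenses_needed(engineers, runs)
        print(f"{engineers} engineers x {runs} parallel runs = {total} licenses")
```

The headcount stays at 10 in both cases; only the number of parallel runs per person changes.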

Now, I will say one last thing. Generative AI and its impact are probably most heavily felt in chip design. Because actually, it’s a phenomenal tool. It’s called generative AI, and effectively, you are generating a chip. We’ve done calls on this, and we’ve heard quotes saying the chip designer becomes two to 10 times more productive with generative AI. Because, for example, in terms of simulating the outcomes, generative AI can be super helpful with that. Instead of running 10 simulations, you can run 1,000 because you don’t need people involved anymore. They can run it, and you just find the best one. That’s incredible, right? That also means the chips get better.

Kevin Murphy (17:15)

So that leads me to ask, would generative AI disrupt Cadence’s business? Would generative AI be an alternative to EDA?

Daniel Pilling (17:26)

Yes, I mean, I cannot give you a 20-year view, but I can give you a five- to 10-year view, I think. So I would think about it like this. So Cadence is the physics and design rules and engine surrounding chip design. So generative AI cannot replace that, right? Because these are incredibly complex rules that all have to work together. They’ve been written over decades.

What generative AI can do, though, it can take these rules and simulate and then apply them without a human in the loop, effectively. So basically, what generative AI actually does is it takes the software and runs it and multiplies it many, many times over. But it cannot replace the particular piece of software. And, as I said, this is one of the most difficult things we humans have written.

So I would argue that before gen AI can replace this, many, many other things would get replaced. So for now, our thinking is that this is really a very big positive for EDA, or Cadence, on the one hand. It’s also, if I may say, a very big positive for the dominant chip design companies.

And if I may give an example: If I’m NVIDIA, I can use this to increase the cadence of my chip design (sorry for the play on words here) from two-and-a-half years to one year. That basically means I can outrun my competition by having chips out much faster than the other players, because I have the scale to do that: You have to pay way more to Cadence to get more licenses.

Kevin Murphy (19:00)

So since you mentioned NVIDIA, who are some of the other Cadence customers? Who else would be using this software?

Daniel Pilling (19:08)

Everyone. And it goes across the entire industry. There are two big players in this that have the entire suite. There’s a third player that does some parts of it. So it’s a very, very consolidated industry, which wasn’t the case 20 years ago, and it’s sort of the same playbook across all of semiconductors. The industry consolidates because the R&D intensity is going up.

So you have a few big players doing this, and that’s it. Now, in terms of the big customers, it’s going to be NVIDIA, Intel, MediaTek, Broadcom, Texas Instruments on the analog side, ADI on the analog side, Marvell, any big chip design company, big or small, all the startups, and, actually, I should also mention, interestingly enough, big companies like a hyperscaler or a Tesla. They all started designing their own chips, too, and they’ve also all become big customers. So actually, your customer TAM [total addressable market], you might say it has been increasing in the past few years because more and more people are designing their own chips or trying to at least.

Kevin Murphy (20:13)

Excellent, yes, so that’s an interesting way of thinking about how the overall pie is expanding but also the number of slices within the pie. The number of people coming up with different ways of doing things is also growing. A lot of food analogies in this podcast today.

Daniel Pilling (20:27)

Can I add one more slice to it? Maybe the size of the pie is going on top or something. So I think, so you’re right. I think there are maybe three things.

One, you have more people designing chips.

Two, the complexity is going up, as we discussed. That means much more verification, for example, is required.

And three, which I genuinely think is a new thing and it’s going to impact them significantly as well, is this idea of the increase in cadence effectively that I just mentioned with NVIDIA. Because what’s happening now is we are sort of in this race of AI chips are really important. We have NVIDIA, and that company, in our opinion, is really fairly dominant in that space. But you have everybody else trying to do it too. And now, NVIDIA is increasing the speed of chip design, which means everybody else will do it too.

And you know, if you want to increase the speed of something, you’re going to have to do the same amount of work in one year instead of two-and-a-half years. And that’s great news for Cadence because that means, again, you have to buy more licenses. And we’re just starting this now, right? NVIDIA is only starting this today. I would think their competitors, whoever’s trying at least, will do the same over time. So basically, you have three sort of nice little growth drivers here increasing the pie.
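A back-of-the-envelope sketch of why a faster design cycle implies more licenses: if the total amount of work is fixed and perfectly parallelizable (both assumptions), compressing the schedule scales the concurrent capacity needed by the ratio of the two durations. The baseline license count is a made-up number.

```python
import math

# Illustrative sketch: assumes a fixed amount of work that parallelizes
# perfectly. The baseline of 100 licenses is a hypothetical figure.

def licenses_for_schedule(baseline_licenses, baseline_years, target_years):
    """Concurrent licenses needed to finish the same work in less time."""
    if target_years <= 0:
        raise ValueError("target_years must be positive")
    scale = baseline_years / target_years
    return math.ceil(baseline_licenses * scale)


if __name__ == "__main__":
    needed = licenses_for_schedule(100, baseline_years=2.5, target_years=1.0)
    print(f"2.5-year cycle at 100 licenses -> 1-year cycle needs {needed}")
```

In practice, work rarely parallelizes perfectly, so this should be read as a lower-bound intuition, not a forecast.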

Kevin Murphy (21:49)

Yes, and so the semiconductor industry is notoriously cyclical in certain areas. Now, we can talk about different cycles or super cycles on top of that, but it’s cyclical. Is Cadence caught in that cycle as well?

Daniel Pilling (22:02)

Yes, less so. And it’s actually interesting. The market knows that and tends to reward it effectively with a higher valuation because of that. Now, whether that’s right or not is debatable. So Cadence is a software company. And the key driver for this business is basically R&D spending by the big chip designers.

Now, the big chip designers have really favorable margin structures. So it means that even if you have a down cycle in terms of their end markets, they know that in a year’s time, two years’ time, whenever it might happen, the market will come back, and their competitors will keep on designing. So that means actually that R&D spend is much, much less cyclical, which is a significant benefit to Cadence, and effectively, their business becomes way less cyclical.

And then the market really likes that and rewards it with a slightly higher valuation. So the bottom line: It’s a much less cyclical business.

Kevin Murphy (22:58)

And it’s a software company that I’m going to guess is a subscription-based model. Is that accurate?

Daniel Pilling (23:05)

Yes, it’s a mix. I mean, so they sell licenses, but effectively, de facto, it’s a subscription-based business model. I guess the beauty is you re-engage with your customers on a fairly repetitive basis. And then, whenever they start designing a new chip on a new node, they will have to buy new licenses. So you’re going to sell more. And then, to your point, those are purely subscription. So you’re going to get paid no matter what.

Kevin Murphy (23:31)

Yes. And going back to the customer base that you described, it sounds like it’s everything from leading-edge chips to commodity analog chips. So we described at the beginning of this conversation a lot of the value they add on the leading-edge side, increasing complexity, dealing with physical and mechanical limitations to what you can do. On the less complicated side, what’s the end goal? Is it increased throughput? How are they adding value to the Texas Instruments of the world?

Daniel Pilling (24:01)

Yes, so a few things. First of all, there are certain shades of gray there, right? But even on the so-called trailing edge, those nodes will get improved, not necessarily by shrinking, but by making them, for example, better in terms of power consumption. So for example, and this is maybe not a Texas Instruments example, if I look at Taiwan Semi and their 28-nanometer node, that’s been around for a long time and is certainly not leading edge anymore. But every year, Taiwan Semi still improves that node and improves the electricity profile of a single transistor within that node to whatever use case requirement that might be. Texas Instruments, ADI, all these companies will do something similar there as well on the trailing edge, depending on how old the nodes are.

So Cadence will help you with that. Now the second thing is, I think you might want to think of Cadence in analog a little bit as you might think of Bloomberg for financial workers. So as you know, the Bloomberg UI [user interface], it looks a bit like it’s from the ’80s. The reason why it was never changed is, basically, people got used to it.

And the same thing happened here, right? So Cadence is the dominant analog EDA company. EDA designers within the analog space are decently old, actually on average, and might have been working with this product for 20 to 30 years. So they’re used to the UI experience, and they will never actually get off.

So there are really two benefits here. One, you know this tool, and you know how it works, and it would cost a tremendous amount for you to retrain. And two, there’s still work being done on these older nodes, too, both in terms of improving them but also actually in terms of designing new chips.

And I should have mentioned this, too: Analog is a growing space. We went from thermostats with nothing inside to the [Google] Nest, but we’re going to have sensors everywhere in the world. You’re designing a lot of new chips on the analog side, which also helps, then again, Cadence because you’re selling more licenses for that.

Kevin Murphy (25:58)

And you kind of answered this, but maybe flesh this out a little bit more. It would seem to me, once a chip is designed, and it’s in the marketplace, and it works—not leading edge, not innovative, but a chip that does what it’s supposed to do—how often, other than kind of power consumption, and maybe that’s part of it, but how often do the designers go back and kind of re-architect the thing? Is it years? Is it months? I mean, how often do you have to actually revisit that?

Daniel Pilling (26:24)

Yes, so it depends, but I’ll give you numbers. If it’s a consumer product, every year. So the iPhone is going to have some new sensor in it every year. If it’s an industrial product, it might be 20 years, literally 20 years. If it’s an automotive product, it’s going to be five to eight years. So it depends.

Now, if I may say as a side comment: The companies that get designed into these five- to 20-year products, like Texas Instruments, they have incredible margins on that, right? Because once they’re designed in, the OEM [original equipment manufacturer] will not design you out, and you just keep on producing those.

Now, the positive though for Cadence is this idea of saying, well, OK, on the consumer side, you get redesigned all the time, but actually, even more attractively on the industrial and automotive side, we’re only starting. Right, the average car today has very few sensors, has almost no digital logic inside. And we know in 10 years, it’s going to look way different. It’s the same in industrial, even more so. The robot stuff has only started to happen in the past decade, and we’re nowhere near satiating this. So you’re going to have a lot of growth in terms of designing new chips for that space.

Kevin Murphy (27:32)

Yes, and that’s actually a good point. We did a podcast on Keyence, which speaks to that. I think when people think of factory automation, they naturally just think of robots, robotic arms replacing workers. But there’s so much more to it. The sensors, the scanners, all the things that make sure the manufacturing process is running as expected.

And they aren’t complicated robots. It’s just a camera with a chip in the back of it, in some cases, or a thermal sensor making sure things aren’t running too hot or too cold. So yes, that’s a great point. And that’s just, again, even that’s in early innings. So it sounds like a lot of growth ahead, both at the kind of the simple level and then in the leading-edge design level.

Let’s talk a little bit more specifically about the company Cadence itself. Give us an idea of its history and the management team. Who’s running this company?

Daniel Pilling (28:22)

Yes, so the company was started in the ’80s and has gone through a significant number of mergers and acquisitions. So have its competitors, where effectively they all started in one small part of chip design and ultimately acquired or grew internally everything else. So today, you have two companies really doing the entire suite.

Now I’ll tell you two things that attracted us particularly to Cadence. One is management. The management teams in this industry are all phenomenal. I’m not trying to say the other competitor doesn’t have a good team; they do. They’re all fantastic, just fantastic.

What we thought here about Cadence is, I mean, their CEO Anirudh [Devgan] we met multiple times. For lack of a better word, I mean, he’s incredibly bright. He’s actually an engineer himself. He studied at the Indian Institute of Technology, which is obviously one of the best unis in the world. He has this reputation of just being a brilliant, brilliant engineer. That’s pretty cool. He’s not a manager. He’s actually an engineer himself. I think that permeates the company, where most people running things at Cadence are actually very knowledgeable in terms of the tech.

Now, the second thing that I thought was a really good show of force for the quality of this management team is a second act they envisaged for the company. Whereas they said, today, we’re an EDA company, right? So we design and help you design chips. In the future, though, the packaging of these chips is going to become more important.

And they’re absolutely right. Let me give an example. So when you design an AI chip, there’s going to be a lot of heat dissipation. A lot. So the way that you package this and the way that you actually design the thermals and the heat dissipation and whatever electromagnetism you may have is actually becoming more important. This is not a core sort of chip design thing. It’s more the physics around the chip. They’ve done that too. They started that six, seven years ago. Actually, that was recently proven to have been the right thing because their competitor Synopsys is buying a business called Ansys, which we actually used to own at Sands Capital, that does the same thing. But they’re just seven years later, right? So whereas Cadence today already has a suite for doing this, and it is actually integrated, Synopsys is going to have to take a few years to sort of integrate the two if this all goes through. So I thought that’s interesting.

But again, I want to just really make this clear. This is a super high-quality management team in an incredibly high-quality industry, and actually, the other company also has a really high-quality management team.

Kevin Murphy (30:55)

Yes, but sometimes being able to envision the future in a kind of a unique or slightly different way might give you an edge. It sounds like that’s what Cadence is all about.

Excellent. Well, let’s flip to, I will call them the negatives, but the risks. What is a pothole that they could inadvertently step in?

Daniel Pilling (31:13)

Yes, I’ll give you one industry-specific, right? And maybe they don’t have that under control. And I’ll give you one company-specific.

So the industry-specific one goes like this. Each time we go to a so-called new node—I mean, each time Taiwan Semi comes out with a new node, whether it’s three-nanometer, two-nanometer, whatever comes next—the cost per wafer goes up by about 20 percent to 25 percent. And a wafer is basically this round disk of silicon that is used to manufacture the chips, so the chips are made out of that. That also means that the cost per chip goes up by the same amount as long as the size of the chip doesn’t change. Now, maybe the best way to see this is the iPhone chip, the brain of the iPhone, has gone up in cost by about five times over the past decade. From $20 to $100 plus.

Now, if you’re Cadence, you thrive on complexity, right? You thrive on this idea that we’re going to have the next node, and because it’s very complicated, you sell more licenses, etc., etc. The key risk is that the cost goes up too much and that we don’t have a use case, right? Because the cost goes up every time by 20 percent, at some point, maybe you don’t have a use case. Now, the reason why we’re comfortable with this is like this. The semiconductor use case has always outrun the cost. Right? I’ll give you an example.

We did a survey a while back about cars and how much people would want to pay a month for a self-driving vehicle. And it turns out they would want to pay a lot. So just this idea of saving an hour of your day to do whatever you want is really attractive. Now, that’s going to cost a lot in terms of chips, right? Thousands of dollars. That’s just one use case. Another use case might be gen AI helping to design NASA spaceships. Super high value.

So what I’m trying to say is that the cost going up is OK because the use case is going up. And so I’m pretty comfortable with that, but if the industry slows down, that would not be good for their growth at all. And that would be a big risk. But they don’t have that under control, right? Because they’re playing as part of the three-legged stool; they’re just one part of it.
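
As a rough illustration of the per-node cost point above, the arithmetic can be sketched in a few lines of Python. The 20 percent and 25 percent figures come from the conversation; the function name and the numbers of node transitions shown are assumptions chosen only for illustration, and the sketch holds chip size constant, as the discussion does.

```python
def cost_multiplier(increase_per_node: float, node_transitions: int) -> float:
    """Compound a fixed per-node wafer cost increase over several node transitions.

    With chip size held constant, cost per chip scales by the same factor.
    """
    return (1.0 + increase_per_node) ** node_transitions


# 20% and 25% are the per-node increases quoted in the episode.
# The transition counts (1, 3, 5) are hypothetical, for illustration only.
for rate in (0.20, 0.25):
    for transitions in (1, 3, 5):
        factor = cost_multiplier(rate, transitions)
        print(f"{rate:.0%} per node over {transitions} transition(s): x{factor:.2f}")
```

The point of the sketch is only that a fixed percentage increase compounds: the multiplier grows faster than a simple sum of the per-node increases would suggest.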

Kevin Murphy (33:20)

Yes, that’s the environment they operate in. But so far, it’s been positive. You mentioned a while ago that there’s a name for that. It’s a paradox, am I right?

Daniel Pilling (33:30)

That’s right. Yes, so it’s called the Jevons Paradox, which is that this researcher a couple hundred years ago noticed that as the price of coal being mined went down, the total volume in terms of dollars sold actually went up, and that was surprising, right?

So the way to think about this is that an NVIDIA chip, the next generation, is going to be five times better than the previous generation. Five to 10 times. We don’t know, right? They will charge you, again, hypothetically, double for that, but that still means that on a per-compute basis, it’s much cheaper for you, right? So that means the cost is actually going down at the same time your use cases are exploding and you’re selling more AI chips. That’s exactly what’s happening. So that’s the Jevons Paradox, maybe in a bit more complicated fashion than coal, but it’s been working, as we can see with NVIDIA and the entire industry.
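
The per-compute arithmetic in this answer can be written out as a small sketch. The "double the price" and "five to 10 times better" figures are the hypothetical numbers from the conversation; the function names and the unit-growth figure in the last line are assumptions added for illustration, not data.

```python
def relative_cost_per_compute(price_multiple: float, performance_multiple: float) -> float:
    """Cost per unit of compute versus the previous generation (1.0 means unchanged)."""
    return price_multiple / performance_multiple


def total_spend_multiple(price_multiple: float, units_multiple: float) -> float:
    """Total dollars spent versus the previous generation."""
    return price_multiple * units_multiple


# Hypothetical figures from the episode: price doubles, performance rises 5x to 10x.
for performance in (5.0, 10.0):
    ratio = relative_cost_per_compute(2.0, performance)
    print(f"{performance:.0f}x better at 2x the price: cost per compute is {ratio:.0%} of before")

# Assumed for illustration only: three times as many chips sold at double the price.
print(f"Total spend multiple: {total_spend_multiple(2.0, 3.0):.0f}x")
```

Under these assumed numbers, each unit of compute gets cheaper while total spending on chips still rises, which is the pattern being described.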

Kevin Murphy (34:25)

Great, OK, so that gives you comfort that risk is there but less likely to materialize, just given the dynamics of the industry.

Daniel Pilling (34:35)

You know, actually, honestly, in fact, I love that risk is there because this is the so-called wall of worry of semiconductors. And I’m just not worried about it. I mean, I should be. I look at this every day, right? I mean, no doubt. I’m not dismissing it. But it gives us this massive opportunity to buy these companies that always have a wall of worry. But effectively, the wall of worry is a long ways away. So we can create a lot of value by that because the market factors in that wall of worry.

If you Google this, you will see so many articles discussing this: the issues, the costs, etc. But if you dig in, it just doesn’t matter right now. That means these stocks usually are underpriced for the growth they have.

Actually, if you like, I’ll give you another example, which blows my mind. In the ’80s, a stepper lithography machine from ASML was like $50,000. Today, it’s like $200 million, and we’re still buying them. If anything, the equipment today is probably going to generate a better return on investment if you’re Taiwan Semi or whoever’s buying this thing than the one in the ’80s.

Now, if you then say, well, we just talked about AI chips, what about those? Well, they’re costing tens of thousands of dollars today. Maybe they’ll cost hundreds of millions of dollars in the future because one of those chips is going to be so much better and drive so much ROI [return on investment]. Maybe that’s just the same track again now. And so anyway, so we have a lot of confidence that this is not a risk that’s going to transpire.

Kevin Murphy (36:05)

Yes, well, I cut you off when you were describing Moore’s Law, too, which was kind of the inverse of that. The price per transistor, or price per compute, comes down as the distance between the semiconductor or the circuit shrinks.

But on that same note, thinking of Moore’s Law, one of the conversations out there in the industry is that there is a limit to Moore’s Law, and that’s where you start getting into the need for quantum chips or something. Because at some point you can only put atoms so close before you basically break the laws of Newtonian physics and have to switch into some more quantum realm. Does Cadence, are they planning for that? Are we too far out in the future to even think about that? Or would they be involved in the testing and design of quantum chips?

Daniel Pilling (36:56)

I mean, the answer is to be seen. Quantum would be a new paradigm, right? And it would significantly change everything for all players. Now, I’m going to give you a bit of a roadmap there in a second, but I would say one thing. If quantum computing is likely to be in the center, then the edge is still going to be silicon because you can’t carry a quantum computer in your, you know, within your smartphone. And there could be a good argument to be made if the center gets massively accelerated that, actually, you’re going to have significant growth at the edge, right? As we discussed, for example, if AI gets much better, which is trained in the center, that proliferates to way more edge applications. So we’ll see. This might not be even a negative, right?

But now, in terms of the roadmap of things, we’ve done a tremendous amount of work on quantum computing, and, humbly, as far as we can tell, that still seems to be a long way out in terms of it making an impact on this industry. And the semiconductor paradigm is here to stay for a long time.

And, if I may say on the Moore’s Law thing, so we go to these industry conferences all the time. One big theme I have noticed for a long time is that when engineers, really bright people, present at these conferences, they usually will not present on something that they’ve solved. They will usually present on some big problem that needs to be solved. And then when you listen to the problem, you think, wow, this will never get solved. But when you go there again the next year, this problem has been solved and completely forgotten. And there’s always seemingly some sort of solution. It’s incredible, it really is incredible. So that pertains to the wall of worry a little bit too. Now, at least in our opinion, you have a 10-plus-year roadmap on Moore’s Law as it is today. We could talk about that too.

But then I think the key thing you want to look at is the end of Moore’s Law is going to look like this. We’re going to go from scaling the transistor to actually stacking transistors. So this has happened in memory, right? Where instead of having flat structures, we went from a flat city to a city with skyscrapers. That’s going to happen in logic, too, at some point. When that happens, you know that actually, OK, scaling is over, and we’re going to start stacking. But that actually might be still even really positive for the industry in general, too, because that’s really complicated as well. But that’s sort of the telltale. We’re far, far away from that. I mean, this is probably 2035 or so.

Kevin Murphy (39:28)

Yes. Why hasn’t logic started stacking yet?

Daniel Pilling (39:32)

Yes, so it’s an issue of cost and heat dissipation. So if you want to start stacking, you have to … You know, these things get pretty hot, so you’d have to have some sort of clever way of dissipating the heat, which requires new materials that aren’t there yet. And Taiwan Semi, for example, presents on this a lot. It’s called a 3D IC [integrated circuit], potentially. And again, it’s far out.

So the cheaper way of doing things is to keep on scaling via lithography means and via 3D means per transistor. So the transistor itself is moving from being rather planar to becoming 3D, and the reason for that is like this: If you can insulate that gate, that also means that you reduce the issues of parasitic capacitance and quantum tunneling, which we discussed in the beginning, right? It’s like effectively the same. We insulate cables, right, because we don’t, well, probably we don’t want to get hit by electricity, but also the electricity can’t come out. So you’re doing the same thing here, but that requires a 3D structure. So this is the better way for now, yes.

Kevin Murphy (40:36)

OK, that’s excellent. That’s very optimistic. I like hearing that, you know, problems are presented, and problems are solved. So that’s great.

So Daniel, last time you were on the podcast, we got some requests for some information about you. You’re clearly very knowledgeable about this industry, but maybe give us a little bit of your investment history. You know, where did you start to become interested in this, and walk us through your background.

Daniel Pilling (41:01)

Thank you, Kevin. I mean, so I’m originally from Germany, as you may hear from my ever so slight accent. So I was interested, I mean, I’ve been interested in stocks from a pretty early age. I got … I was fortunate my father, in particular, was an avid sort of private stock market investor, and then he shared some of those things, and I just saw, wow, these things are going up, and they’re super interesting to analyze. And he gave me some magazines to read, and so since then, it never sort of left me. And coming from Germany, I then went to study in the U.K., in the English-speaking world, and sort of careerwise, it was actually not too dissimilar.

So when I started on the so-called buy side, I was at Fidelity and various hedge funds, and I managed to join Sands Capital about six years ago now. And one interesting element was actually when I started, it felt like nobody really wanted to do semiconductors because it was cyclical.

And it didn’t have all the exciting growth opportunities it has today. AI wasn’t even an idea. AI was only in the head of Jensen [Huang] from NVIDIA. Literally, he was the only guy who saw it. Everybody else was looking at a cyclical industry, fairly low growth, maybe a bit of growth from smartphones, etc. So nobody was really that interested. And so as life happens sometimes, I just happened to do it.

And then, as I said, I was fortunate to move to the U.S. from the U.K. I was very fortunate to join Sands Capital to do something I’m really, I’m genuinely very passionate about. I invest in the long term doing deep-dive research, hopefully with some sort of, you know, differentiated view and great companies. And, so since then, the industry has gone through this inflection, and we’re very heavily invested in it. Yes. And I have to say, all of it has been a tremendous amount of fun—probably the most important thing actually for me personally.

Kevin Murphy (42:57)

Yes, oh, thanks, that’s a great background.

And I’m thinking: People who say they cover the semiconductor industry, but that’s really all they can say about it, are definitely riding that cyclical roller coaster. And I think they’re stock traders for the most part. I think what is unique about you, and I think fits really well with Sands Capital, is you can use the industry as the backdrop, but then look for that intersection of change and innovation within the industry to find the real gems within there.

I think back to one of our first investments in this space. Well, Intel, I guess, was one of the first. But after that, it was Qualcomm. And we all learned, as much as anybody wants to know about code division multiplexing and how data is transferred over airwaves and then reassembled back on your iPhone through these chips that Qualcomm was selling. And if you just thought, well, I want to invest in mobile telephony, you’d probably end up with a basket of Nokia and stuff like that, not realizing that the real engines of innovation and growth are the chips within those devices, and Qualcomm owned the patent on the most powerful, best chips out there. And you’re carrying on that legacy and, I think, bringing a lot more rigor and sophistication to it than we ever had at the firm.

Daniel Pilling (44:20)

Yes, it’s been great, a lot of fun too. I mean, maybe you could say that somehow NVIDIA is the only company that managed to do both. They’re sort of the chip designer, but they’re also creating their own market, sort of very unique from that perspective. So but yes, it’s been great. It’s a good industry.

Kevin Murphy (44:36)

Yes, for sure. Any other thoughts you want to leave us with about this company in particular or the industry generally?

Daniel Pilling (44:44)

Yes, I mean, I want to maybe provide a sort of a hypothesis, if I may. And it relates to AI, but it also then relates to sort of what they are doing. So one can think of AI sort of as a … sort of as something that’s obviously improving significantly. And as it improves, you could think of it sort of as an IQ sort of improving over time.

And I guess what happens when an IQ improves is that you can do more things, more complicated things. And you can sort of think about it like a curve that goes up and to the right. So maybe if an algorithm has an IQ of, totally making this up, right, like let’s say 50, it can do X, but then once it gets to 180, all of a sudden it can develop drugs or whatever it might be.

I think the point I’m trying to make is that, as this happens, your addressable market literally explodes. It’s just like what happened with the smartphone or the iPhone, I should say, where the hardware improved massively, and that meant you had an explosion of applications that actually work on the phone.

Maybe an example. I bet a Snapchat app would not have worked 15 years ago due to compute constraints. Today, it’s no problem. Now, the ramification of this is like this. You’re going to have chips in everything. Because everything is going to become smart, and for Cadence, that’s phenomenal. That means not only are you going to design really powerful AI chips to train the stuff to run the inference, but also you’re going to have chips everywhere in the world that actually run either inference via the cloud or on device. So if you think about volume growth for chips, this is going to be tremendous, and it’s really exponential. We don’t know what it’s going to look like.

But we can take the example of the iPhone App Store and say, this is really interesting. It’s exponential. The market can’t grasp it. I mean, we’re trying to grasp it, right? And I don’t think we fully grasp it. But when something like this exponential shows up, I think you want to really, really invest behind it with significant weights, which is what we’ve done.

Kevin Murphy (46:55)

Yes, yes, that reminds me actually of something you brought up the other day. It’s something I was thinking about in terms of just the miniaturization of technology. And thinking back to the first cell phone, I worked on a political campaign, and the candidate carried one of the only cell phones that we’d ever seen in a briefcase. And, you know, when we got to the airport, they’d plop the briefcase on the tarmac, open it up, pull the antenna out of it, and make a call. And then they ended up in cars. And now they’re in our pockets and on our wrists, that kind of thing. And you showed me the other day the [Apple] Vision Pro, which looks sleek, looks a lot better than the [Meta] Oculus, from just an aesthetics perspective, but it’s big and heavy. And it’ll get smaller. And solving that problem is going to be the purview of chip designers.

Daniel Pilling (47:45)

Yes, I mean, I agree. I mean, it’s … So I joke sometimes among the tech team, if you had gone back to ’99 and basically drawn out the curve of Moore’s Law and thought through what these chips can do when X, Y, and Z happens in seven, eight, nine years, I think you could have probably seen something like the iPhone. I mean, you wouldn’t have known what it looks like. But you could have forecasted the compute power within the iPhone with very high accuracy.

Now, the same thing is happening here. Absolutely. I totally agree with you. The same thing is going to happen with these Vision Pro glasses. They’re going to get much, much better, which means they’re going to shrink. The battery power is going to improve, and the content is going to be astonishing. I mean, we’re just starting. So we can literally today, you and I, we could sit down and draw a curve of saying what’s the compute going to be within these glasses in the next five to 10 years? And then we could guess what happens. We probably would be wrong. But I can tell you, it’s probably going to be really cool.

Kevin Murphy (48:49)

Yes, yes, for sure. Yes, excellent. Well, this has been really interesting. I could go on for another couple hours, but thanks for taking the time to walk us through this. I think everybody who listens to you learns something every time you talk about a topic. So it’s great to have you on to cover this one in particular. And we’ll leave it at that. And thanks for joining.

Daniel Pilling (49:11)

Thank you, Kevin.

As of February 29th, 2024, Entegris, ASML, Taiwan Semiconductor, NVIDIA, Google, Keyence, Netflix, and Texas Instruments were held in Sands Capital strategies. No other companies mentioned were held in any Sands Capital strategies at the time of recording and were mentioned for illustrative purposes only.

The views expressed are the opinion of Sands Capital and are not intended as a forecast, a guarantee of future results, investment recommendations, or an offer to buy or sell any securities. The views expressed are current as of the episode date and are subject to change. This material may contain forward-looking statements, which are subject to uncertainties outside of Sands Capital’s control. The securities identified do not represent all of the securities purchased or recommended for advisory clients. There is no assurance that any securities discussed will remain in the portfolio. You should not assume that any investment is or will be profitable. A company’s fundamentals or earnings growth is no guarantee that its share price will increase. For more information, including a full list of portfolio holdings, please visit our website at www.sandscapital.com.